Perform Spell Check and Analyze Conversational Flair for Language Learners through Azure Services

Digital transformation and disruption have drawn a lot of interest to the e-learning space. Digital education is now available from anywhere, including from home. With this shift, soft-skills learning has gained considerable traction, and language learning is one of those skills. When good apps are available, learners are more likely to improve their skills anytime, anywhere.

Learning a language is mostly about writing, reading, and speaking, and for many people it is also a hobby. A solution built around this could be very helpful to learners, but it requires substantial machine learning capability, which is both tedious and costly to develop. However, the machine learning services offered by cloud providers, in this case Azure, make it possible to build such solutions in a short span of time. The extensive learning material and documentation available for these cloud services make it easier than ever.

The Use Case

In the context of language learning, the application we intend to create checks a learner's writing and speaking and suggests improvements. It analyses the spelling of the input text and helps improve the learner's conversational flair. This use case is especially useful for people in the initial phase of learning a language (in this case English) who want to make sure they are writing and speaking correctly. It is well suited to first-time language learners, including kids.

Services Used

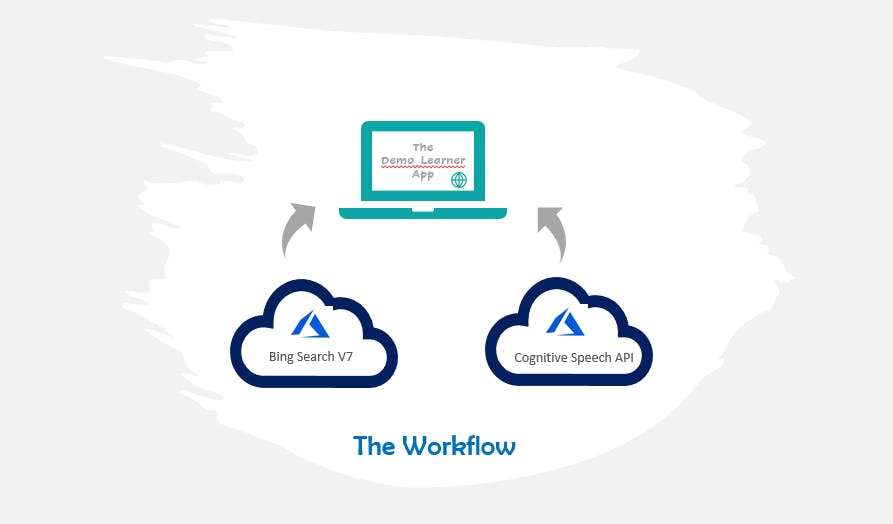

To build this application, I used the following Azure services:

- Microsoft Bing Spell Check API

- Azure Cognitive Services – Speech API

I developed the code in Python and used Microsoft Visual Studio as the IDE.

My Application

I call my application Demo_Learner App. This app will perform two actions:

- Spell-check the user's input text.

- Check the user's conversational skills by asking them to speak a given sentence and then highlighting any differences.

For the sake of simplicity, the app first lets users spell-check their text and then moves on to their conversational skills. English is used as both the input and output language for this application.

But what does this application do?

The app takes input text from the user and checks whether it is spelled correctly. If there are any misspellings, it displays suggested corrections, each with a score indicating how confident the spell checker is in that suggestion. The app then checks the user's conversational flair by displaying a sentence and asking the user to repeat it into their microphone. Once the spoken input is captured, the app highlights the differences between the displayed text and the spoken text.

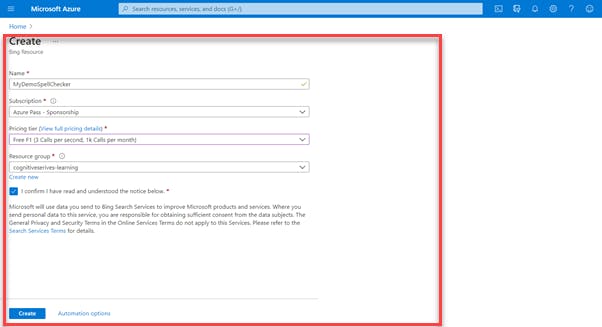

Creating the Bing Spell Check API in Azure

Log in to the Azure Portal with your credentials. Search for the Bing Search V7 API in the Azure Marketplace and create the service.

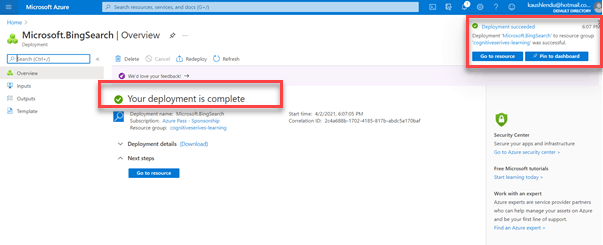

Once the deployment succeeds, we will use this service for the spell check functionality in our application.

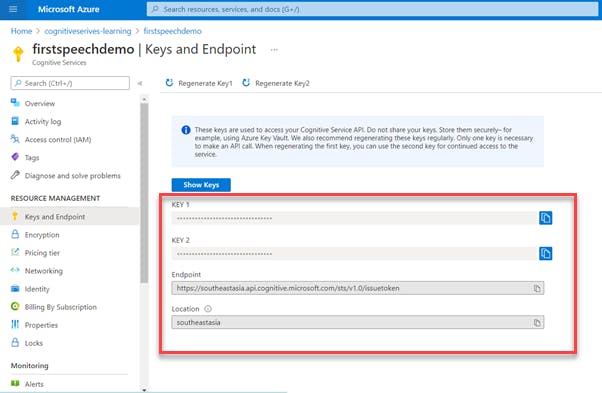

Also note the Key and Endpoint, which are needed to call this API from our application. The key is unique to each resource and must be kept confidential.

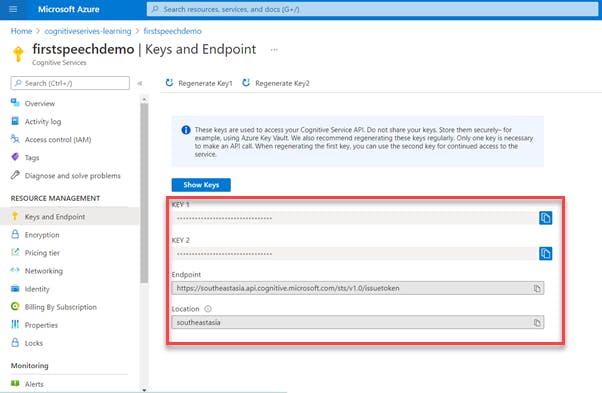

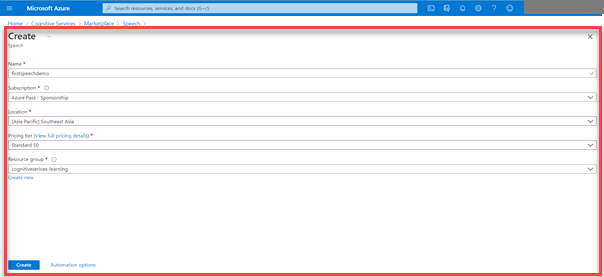

Creating the Speech API in the Azure Cognitive Services

Search for Speech in the Azure Marketplace and create the service. This falls under the Azure Cognitive Services.

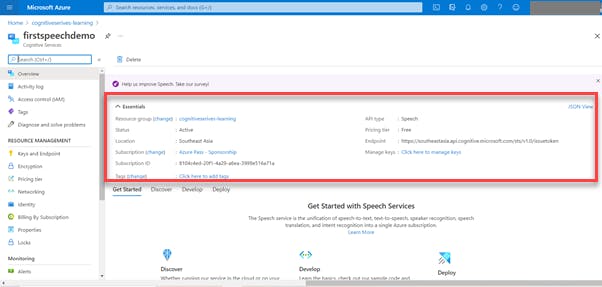

Once the deployment is completed, go to the overview section to view its details.

Note the Key and Endpoint, along with the region (Location) of the resource; the Python Speech SDK is configured with the key and the region. This key is different from the Bing key.
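Because both keys are confidential, it is safer not to paste them into the source file as the listings below do. Here is a minimal sketch, assuming you export the values as environment variables. The variable names BING_SPELL_CHECK_KEY, SPEECH_KEY, and SPEECH_REGION, the AzureSettings class, and the helper load_azure_settings are hypothetical choices, not anything Azure requires:

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class AzureSettings:
    """Credentials for the two Azure resources (hypothetical container)."""
    bing_key: str
    speech_key: str
    speech_region: str


def load_azure_settings(env=os.environ) -> AzureSettings:
    """Read keys from environment variables (the names are illustrative assumptions)."""
    names = ("BING_SPELL_CHECK_KEY", "SPEECH_KEY", "SPEECH_REGION")
    missing = [name for name in names if not env.get(name)]
    if missing:
        # Fail early with a clear message instead of sending an empty key to Azure.
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return AzureSettings(
        bing_key=env["BING_SPELL_CHECK_KEY"],
        speech_key=env["SPEECH_KEY"],
        speech_region=env["SPEECH_REGION"],
    )
```

The values could then replace the placeholders, for example key = settings.bing_key for the spell check request and speechsdk.SpeechConfig(subscription=settings.speech_key, region=settings.speech_region) for speech recognition.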

Once the services are ready, switch to Microsoft Visual Studio, create a Python project, and install the requests and azure-cognitiveservices-speech packages with pip.

For the spell check functionality, here is the code:

```python
# Third-party packages: pip install requests azure-cognitiveservices-speech
import difflib  # used by the speech section that follows

import azure.cognitiveservices.speech as speechsdk  # used by the speech section that follows
import requests

print("Let us spell check your input.")

key = "<your api key>"  # Bing resource key; keep it confidential
endpoint = "https://api.bing.microsoft.com/v7.0/spellcheck"

demo_text = input("Please enter something: ")

# The text is sent in a form-encoded POST body, so the Content-Type must match it.
headers = {
    "Content-Type": "application/x-www-form-urlencoded",
    "Ocp-Apim-Subscription-Key": key,
}
params = {
    "mkt": "en-US",   # market (locale) to check against
    "setLang": "EN",  # language for user-interface strings in the response
}
data = {"text": demo_text}

response = requests.post(endpoint, headers=headers, params=params, data=data)
response.raise_for_status()  # stop early on an invalid key or a bad request
json_response = response.json()

print("Here are the suggestions:")
print('\n')

flagged_tokens = json_response.get("flaggedTokens", [])
if not flagged_tokens:
    print("No spelling issues found.")

for token in flagged_tokens:
    print("Change [" + token["token"] + "] for:")
    for suggestion in token["suggestions"]:
        print("  {" + suggestion["suggestion"] + "}")
        print("  Confidence score is " + str(suggestion["score"]))
    print('\n')

print('**************************************************************************')
print('\n')
```
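Keeping the formatting separate from the HTTP call makes the suggestion logic testable without a key or network access. The sketch below walks the same flaggedTokens and suggestions fields that the listing above reads. The helper name format_suggestions and the sample response values are made up for illustration, not real API output:

```python
def format_suggestions(json_response: dict) -> list[str]:
    """Turn a spell-check response into printable lines (hypothetical helper)."""
    lines = []
    for token in json_response.get("flaggedTokens", []):
        lines.append("Change [" + token["token"] + "] for:")
        for suggestion in token.get("suggestions", []):
            lines.append("  {" + suggestion["suggestion"] + "}")
            lines.append("  Confidence score is " + str(suggestion["score"]))
    if not lines:
        lines.append("No spelling issues found.")
    return lines


# A sample shaped like the fields used above; the values are invented.
sample_response = {
    "flaggedTokens": [
        {"token": "elefant", "suggestions": [{"suggestion": "elephant", "score": 0.9}]}
    ]
}

for line in format_suggestions(sample_response):
    print(line)
```

With the sample above, this prints the flagged token "elefant", the suggestion "elephant", and its score of 0.9.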

For the speech-to-text (conversational skill) functionality, here is the code:

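```python
# Continues the script above: difflib and speechsdk are imported in the spell check section.
input("Press enter to continue: ")

print("Now let us see your conversational skill")
print('-----------------------------')
print('\n')

text1 = "That is a hungry elephant."
print(text1)
text1_lines = text1.splitlines()

# Use the key and region of the Speech resource (not the Bing key).
speech_config = speechsdk.SpeechConfig(subscription="<your api key>", region="southeastasia")
speech_config.speech_recognition_language = "en-US"
# With no audio config, the recognizer listens on the default microphone.
speech_recognizer = speechsdk.SpeechRecognizer(speech_config=speech_config)

print("Please speak into your microphone.")
# recognize_once_async() captures a single utterance; .get() blocks until it completes.
result = speech_recognizer.recognize_once_async().get()
print('\n')

if result.reason == speechsdk.ResultReason.RecognizedSpeech:
    print("You spoke: " + result.text)
    text2_lines = result.text.splitlines()

    # Differ prefixes lines found only in text1 with "- ", lines found only in
    # the recognized speech with "+ ", and may add "? " hint lines.
    d = difflib.Differ()
    diff = d.compare(text1_lines, text2_lines)
    print('\n')
    print("We have identified the differences as highlighted: ")
    print('\n'.join(diff))
else:
    print("Speech could not be recognized: " + str(result.reason))
```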
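Because the prompt is a single line, Differ compares it as one unit, so even a changed comma or capital letter marks the whole sentence as different. A word-level comparison may give learners more precise feedback. Here is a minimal sketch using difflib.SequenceMatcher. The helper names normalize_words and compare_spoken, and the choice to ignore case and punctuation, are assumptions rather than part of the original app:

```python
import difflib
import string


def normalize_words(text: str) -> list[str]:
    """Lowercase the text, strip ASCII punctuation, and split it into words."""
    table = str.maketrans("", "", string.punctuation)
    return text.lower().translate(table).split()


def compare_spoken(expected: str, spoken: str) -> tuple[float, list[str]]:
    """Return a 0-1 similarity ratio and a list of word-level differences."""
    expected_words = normalize_words(expected)
    spoken_words = normalize_words(spoken)
    matcher = difflib.SequenceMatcher(a=expected_words, b=spoken_words)
    differences = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue
        wanted = " ".join(expected_words[i1:i2]) or "(nothing)"
        heard = " ".join(spoken_words[j1:j2]) or "(nothing)"
        differences.append(f"{tag}: expected '{wanted}', heard '{heard}'")
    return matcher.ratio(), differences


ratio, differences = compare_spoken("That is a hungry elephant.", "That is a hungry elegant.")
print(f"Similarity: {ratio:.0%}")  # Similarity: 80%
for line in differences:
    print(line)  # replace: expected 'elephant', heard 'elegant'
```

In the script above, the call would be compare_spoken(text1, result.text). The ratio could also serve as a simple score for the learner's attempt.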

Testing the application

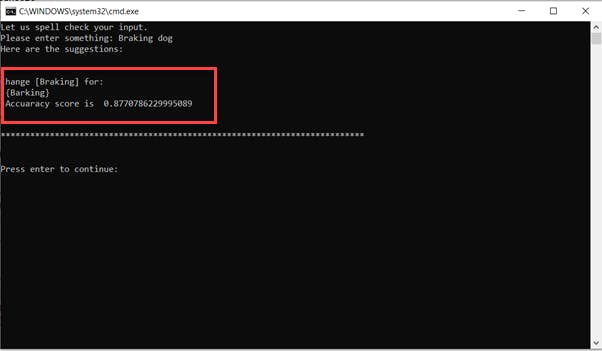

Does this application actually work? Let us test it! When run, the application asks for input text. After the text is entered, the following output is displayed:

It’s a success!

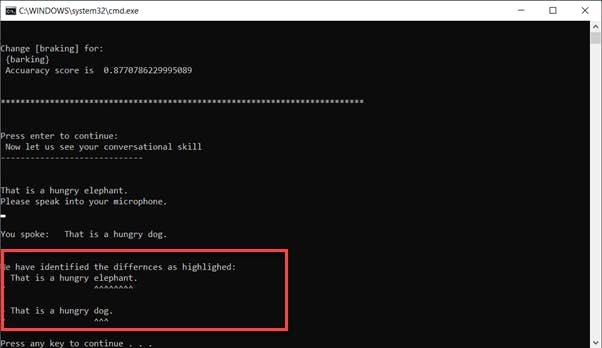

For the second part of the application, a sentence is displayed and the user is asked to read it aloud. The application then highlights the differences between the displayed text and the recognized speech. Here is the output:

It’s a success!

Future Improvements

- A front-end UI would give this app a better user experience.

- Currently, the spell check does not take the context of the input text into account. Adding context would be a useful feature.

- The Speech API in Azure can be further leveraged to create various scenarios for the users based on their conversational flair.

- There is scope to add multi-language support to this app.
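On the context point, the Bing Spell Check API reference documents preContextText and postContextText parameters that supply surrounding text which informs the check but is not itself checked. The sketch below only builds the params and data pieces for the requests.post call used earlier. The helper build_spellcheck_request is hypothetical, and the parameter behavior should be confirmed against the API reference before relying on it:

```python
def build_spellcheck_request(
    text: str, pre_context: str = "", post_context: str = ""
) -> tuple[dict, dict]:
    """Return (params, data) for a Bing spell-check POST (hypothetical helper)."""
    params = {"mkt": "en-US", "setLang": "EN"}
    # Context strings guide the check but are not themselves spell-checked.
    if pre_context:
        params["preContextText"] = pre_context
    if post_context:
        params["postContextText"] = post_context
    data = {"text": text}
    return params, data


params, data = build_spellcheck_request("petal", pre_context="bike")
print(params)  # {'mkt': 'en-US', 'setLang': 'EN', 'preContextText': 'bike'}
print(data)    # {'text': 'petal'}
```

The pair would then be passed as requests.post(endpoint, headers=headers, params=params, data=data).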

You can find the entire code here: Github Link